Using Intel MPI

Intel MPI

Intel MPI is Intel’s implementation of the Message Passing Interface (MPI) standard, which is a common requirement for parallel HPC jobs. Intel MPI offers tunings and features that make it attractive to many HPC users, so it is included “out-of-the-box” with CloudyCluster!

Enabling Intel MPI in your HPC job scripts is not difficult, and the following instructions outline the process:

Note: To skip the tutorial and go straight to the steps for using Intel MPI, see the Summary at the bottom of this page.

Preparing the Job

Once your CloudyCluster environment is created, log in to the login node and copy the sample jobs to the shared OrangeFS file system:

$ cp -R /software/samplejobs /mnt/orangefs/

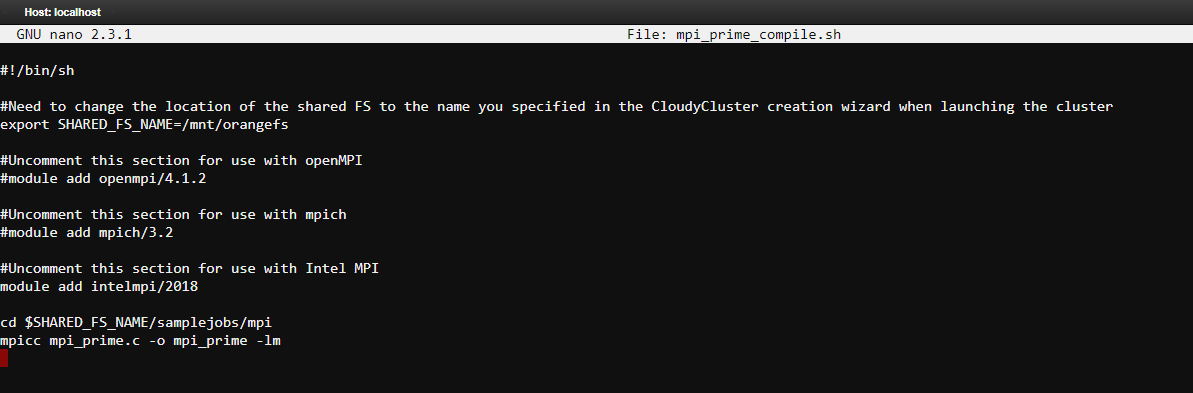

Edit the compile script mpi_prime_compile.sh with

$ nano /mnt/orangefs/samplejobs/mpi/GCP/mpi_prime_compile.sh

to select the MPI version used to compile the MPI Prime job. For Intel MPI, the edited script should load the Intel MPI module:

module add intelmpi/2018
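A complete compile script might look roughly like the sketch below. It is an illustration, not the sample's actual contents: the source file name mpi_prime.c and the binary name mpi_prime are assumptions, so keep whatever names your copy of mpi_prime_compile.sh already uses.

```shell
#!/bin/bash
# Hypothetical compile script for the MPI Prime sample using Intel MPI.
# Assumed names: mpi_prime.c (source) and mpi_prime (binary).

# Load the Intel MPI environment used throughout this tutorial.
module add intelmpi/2018

# Intel MPI's mpicc wrapper compiles with the GNU C compiler;
# mpiicc would use the Intel C compiler instead, if it is installed.
mpicc -o mpi_prime mpi_prime.c
```

The important part is that the same Intel MPI module is loaded here and in the job script, so the program runs against the library it was built with.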

The next step is to run the compile script:

$ cd /mnt/orangefs/samplejobs/mpi/GCP/

$ sh mpi_prime_compile.sh

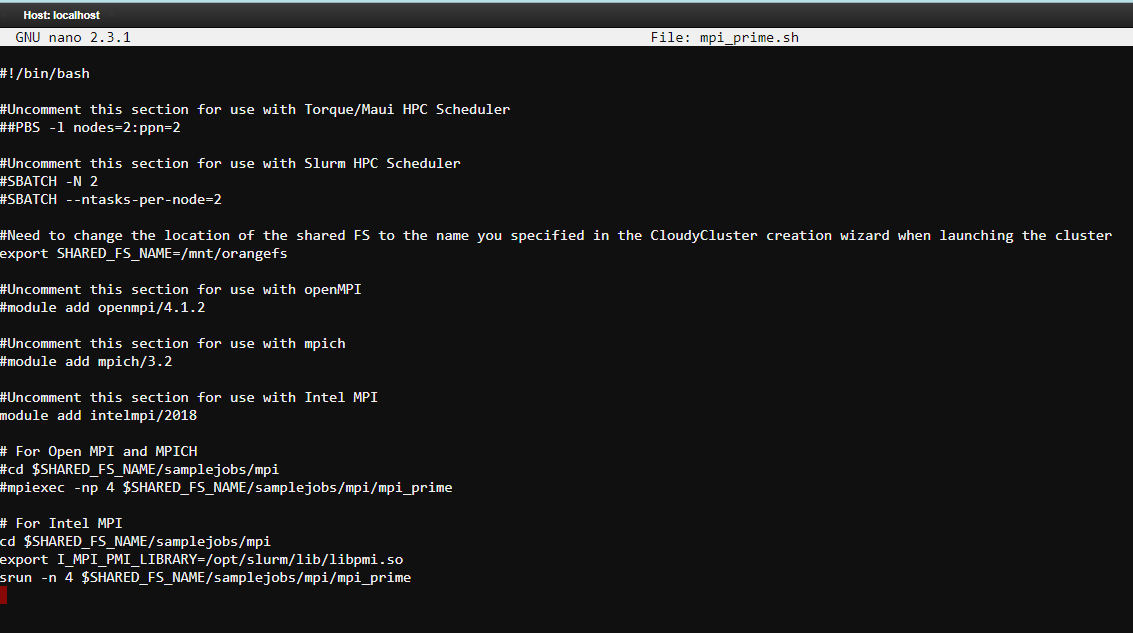

Edit the job script mpi_prime.sh with

$ nano /mnt/orangefs/samplejobs/mpi/GCP/mpi_prime.sh

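to specify the scheduler and the Compute Group nodes to use. Pay close attention to the lines that are commented out, and make sure only the lines for your chosen scheduler are active. If you use Slurm, the edited script should load the Intel MPI module with module add intelmpi/2018 and launch the program with srun rather than mpiexec.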
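For reference, a Slurm job script for Intel MPI might look roughly like this sketch. The job name, task count, executable name mpi_prime and working directory are assumptions; keep the scheduler and Compute Group settings already present in your copy of mpi_prime.sh.

```shell
#!/bin/bash
# Hypothetical Slurm job script for the MPI Prime sample using Intel MPI.
# Job name, task count, directory and executable name are assumptions.
#SBATCH --job-name=mpi_prime
#SBATCH --ntasks=4

# Location of the shared file system (default OrangeFS mount point).
export SHARED_FS_NAME=/mnt/orangefs

# Load the same Intel MPI version that was used at compile time.
module add intelmpi/2018

# Run from the sample directory on the shared file system.
cd "$SHARED_FS_NAME/samplejobs/mpi/GCP" || exit 1

# Launch through Slurm's srun, not mpiexec.
srun -n 4 ./mpi_prime
```

Slurm only reads #SBATCH lines that appear before the first executable command, which is why the directives sit at the top of the script.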

If you renamed the OrangeFS node during setup, update the SHARED_FS_NAME line in mpi_prime.sh to match the name you chose:

#Need to change the location of the shared FS to the name you specified in the CloudyCluster creation wizard when launching the cluster

export SHARED_FS_NAME=/mnt/orangefs
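If you want the job to fail early when this path is wrong, you could add a small guard to mpi_prime.sh after the export line. This is a minimal sketch; check_shared_fs is a hypothetical helper, not part of the sample job.

```shell
# Hypothetical helper: return non-zero (with a message) if the given
# shared file system path is not an existing directory.
check_shared_fs() {
    local fs_path="$1"
    if [ ! -d "$fs_path" ]; then
        echo "Shared file system not found at: $fs_path" >&2
        return 1
    fi
    return 0
}
```

Call it right after the export, so a mistyped name stops the job before the MPI program starts:

check_shared_fs "$SHARED_FS_NAME" || exit 1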

Save, exit, and submit the mpi_prime.sh script:

$ ccqsub mpi_prime.sh

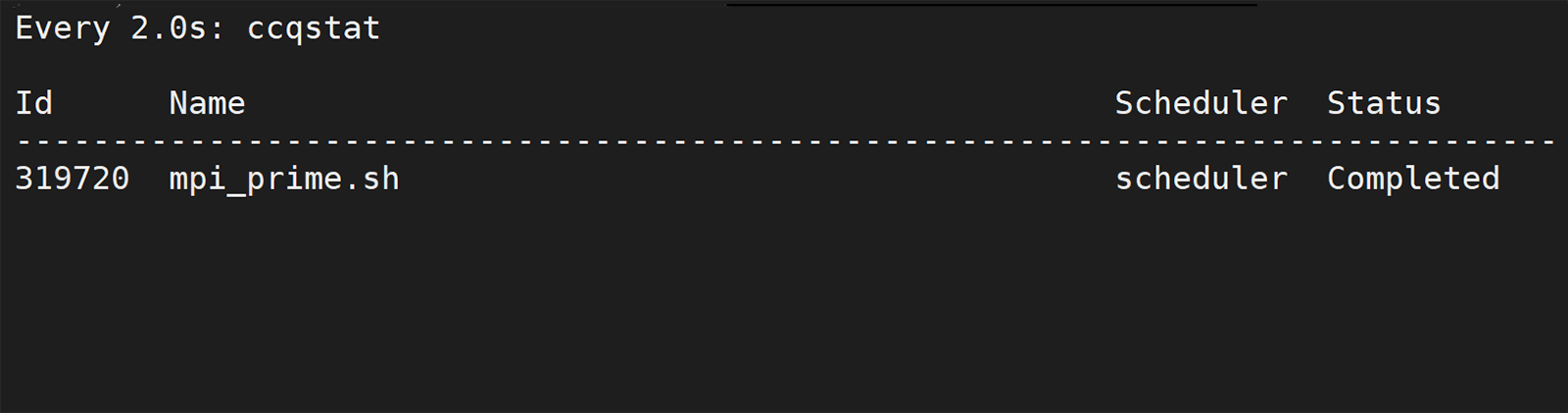

What to Expect Next

If CCQ validates the job, you will immediately receive a confirmation message containing the Job ID assigned to your submission.

$ ccqsub mpi_prime.sh

The job has successfully been submitted to the scheduler scheduler and is currently being processed. The job id is: 319720 you can use this id to look up the job status using the ccqstat utility.
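If you script your submissions, you can capture that ID directly. A minimal sketch, assuming ccqsub prints its confirmation to standard output and the text always contains "The job id is: <number>" as shown above; extract_job_id is a hypothetical helper name.

```shell
# Hypothetical helper: read ccqsub's confirmation message on standard input
# and print only the numeric job ID (nothing if no ID is found).
extract_job_id() {
    sed -n 's/.*The job id is: \([0-9][0-9]*\).*/\1/p'
}
```

Example use:

$ job_id=$(ccqsub mpi_prime.sh | extract_job_id)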

As noted in the message above, you can track the status of your job with the CCQStat utility. Run

$ ccqstat

for a snapshot of your jobs at that moment, or

$ watch ccqstat

to refresh the display periodically as jobs move from state to state until completion.

Job Output

Once the job completes, CCQStat will show a status of Completed. You can then view the job's output file, which is named after the job script and the Job ID:

$ nano mpi_prime.sh«job_id».o

Using 4 tasks to scan 25000000 numbers

Done. Largest prime is 24999983 Total primes 1565927

Wallclock time elapsed: 4.05 seconds

Any errors are written to the mpi_prime.sh«job_id».e file in the same directory.
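To check both files in one step, a small helper like the one below could be run from the job directory. This is a sketch: show_job_result is a hypothetical name, and it assumes the mpi_prime.sh<job_id>.o / .e naming described above.

```shell
# Hypothetical helper: print a job's output file and report whether its
# error file has any content. Return codes: 0 = output found, no errors;
# 1 = error file is non-empty; 2 = output file not found.
show_job_result() {
    local job_id="$1"
    local out_file="mpi_prime.sh${job_id}.o"
    local err_file="mpi_prime.sh${job_id}.e"

    # The .o file holds the program's standard output.
    if [ ! -f "$out_file" ]; then
        echo "No output file found: $out_file" >&2
        return 2
    fi
    cat "$out_file"

    # [ -s FILE ] is true only if the file exists and is not empty.
    if [ -s "$err_file" ]; then
        echo "Errors reported in $err_file:" >&2
        cat "$err_file" >&2
        return 1
    fi
    return 0
}
```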

Summary

Congratulations on your successful use of Intel MPI within CloudyCluster! To review, here are the steps to use Intel MPI in your HPC job:

- Add the Intel MPI module in your compile script:

module add intelmpi/2018

- Compile your MPI program:

$ sh <compile_script>

- Add the Intel MPI module in your job script:

module add intelmpi/2018

- Important: In your job script, launch the program with srun, NOT mpiexec:

srun -n ….

- Submit your job with ccqsub!

$ ccqsub <job_script>
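The compile and submit steps can also be wrapped in one shell function. This is a minimal sketch: submit_intel_mpi_job is a hypothetical name, and it assumes your compile script exits non-zero when compilation fails.

```shell
# Hypothetical wrapper: run the compile script, and submit the job script
# with ccqsub only if compilation succeeded.
submit_intel_mpi_job() {
    local compile_script="$1"
    local job_script="$2"

    # Compile first (the compile script should load intelmpi/2018).
    if ! sh "$compile_script"; then
        echo "Compilation failed; not submitting $job_script" >&2
        return 1
    fi

    # Submit (the job script should load intelmpi/2018 and use srun).
    ccqsub "$job_script"
}
```

For the sample job, run it from /mnt/orangefs/samplejobs/mpi/GCP/:

$ submit_intel_mpi_job mpi_prime_compile.sh mpi_prime.sh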